During the creation of Episode 6 of Play History, I dove deep into the subject of computer monitors. In addition to hosting many computer games, the early computer monitors served as a bridge between the timesharing model of personal computing and the microcomputer revolution in the mid-1970s. This woefully overlooked period sent me wildly off course in my research as I came to realize that terminals were deeply important to the evolution of computer technology.

This will go a bit off course from the normal game talk, but we will run into a few forgotten games along the way. Let me describe how the home computer revolution almost happened on “the cloud,” and why it ultimately didn’t.

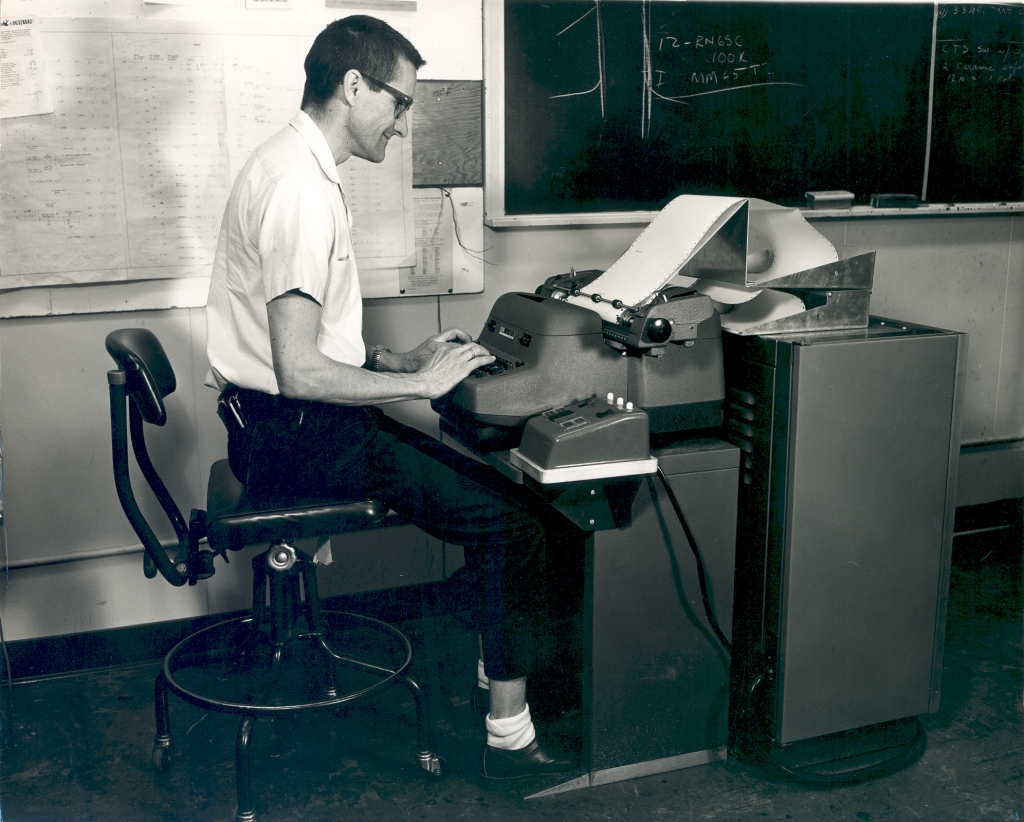

Computer terminals are input/output devices built predominantly around a keyboard, and past the very early days they made up the majority of computer output devices. At the beginning, terminals were usually text-based teletypes, originally developed to carry messages along the telegraph network. Once teletypes came into broad use on computers in the 1950s, programming became significantly easier. Output could be printed to individual consoles, which was the first gateway into personal computing as a concept.

Project Whirlwind changed computing in innumerable ways, but two of the most important things it introduced were the real-time display and the first prototypes of timesharing on computers. With this came the beginnings of computer monitors. These were initially built for specific models of computer; there was no standardized language for terminals and little concept of computers being parceled out to large numbers of people. The display systems seen on the likes of the PDP-1 were never going to become widespread.

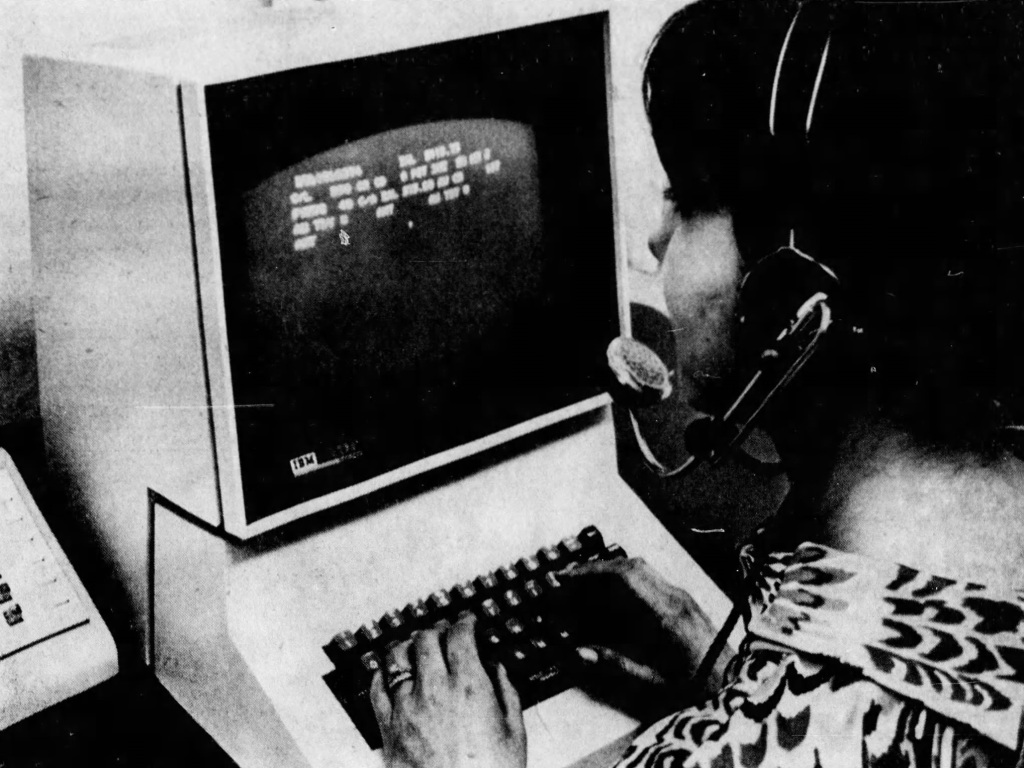

Two things propelled the monitor industry into being. First was the adoption of the ASCII text standard, which provided a common code for representing characters digitally. Then the widespread adoption of timesharing, following the Dartmouth model, gave organizations an incentive to buy terminal stations. While the majority of these were teletypes, demand for CRT-based terminals created the first wave of computer monitor products. The most popular early model by far was the IBM 2260, a no-nonsense text display that bypassed the limitations of ink and paper, introducing business clients to the world of computer displays.

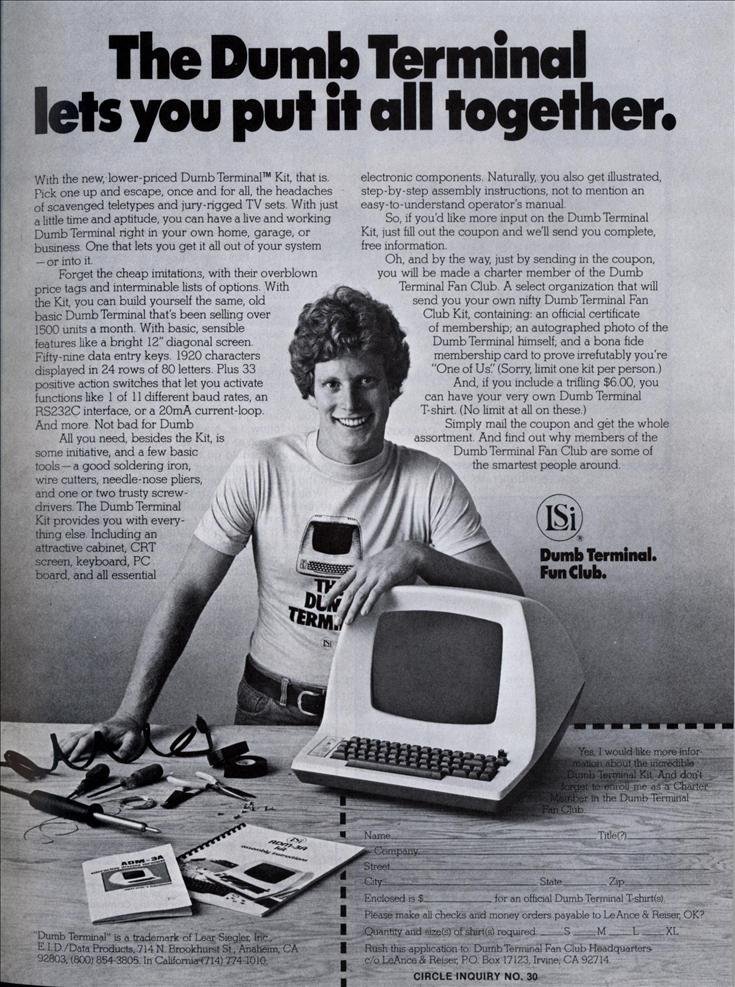

These displays were works of advanced engineering, but they were entirely dependent on their connection to the timesharing computers. In retrospect, we call these “dumb terminals” because they lack a “brain” to function when detached, but initially there was no such distinction. Monitors were peripheral devices, given just enough electronic magic to draw a fixed set of preset shapes, in either vector or raster form, from a component called a character generator. This sidestepped memory restrictions and kept the displays close to teletypes in behavior, at the cost of limiting the kinds of graphics they could output.

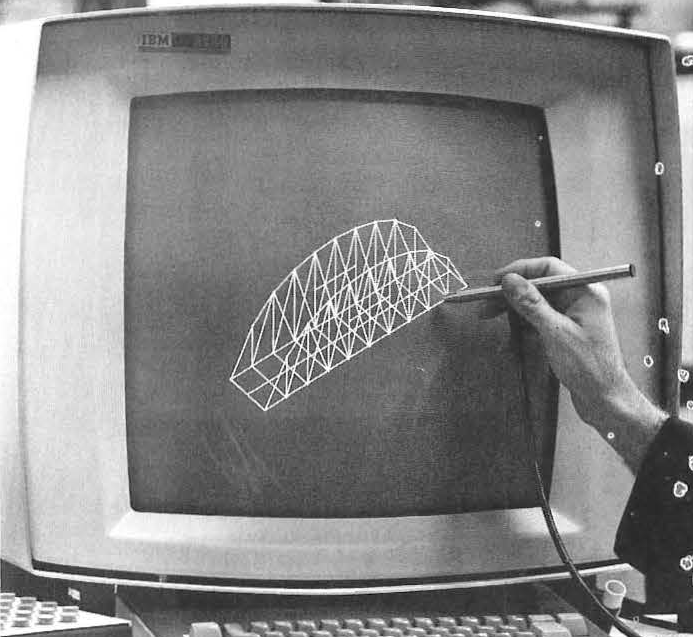

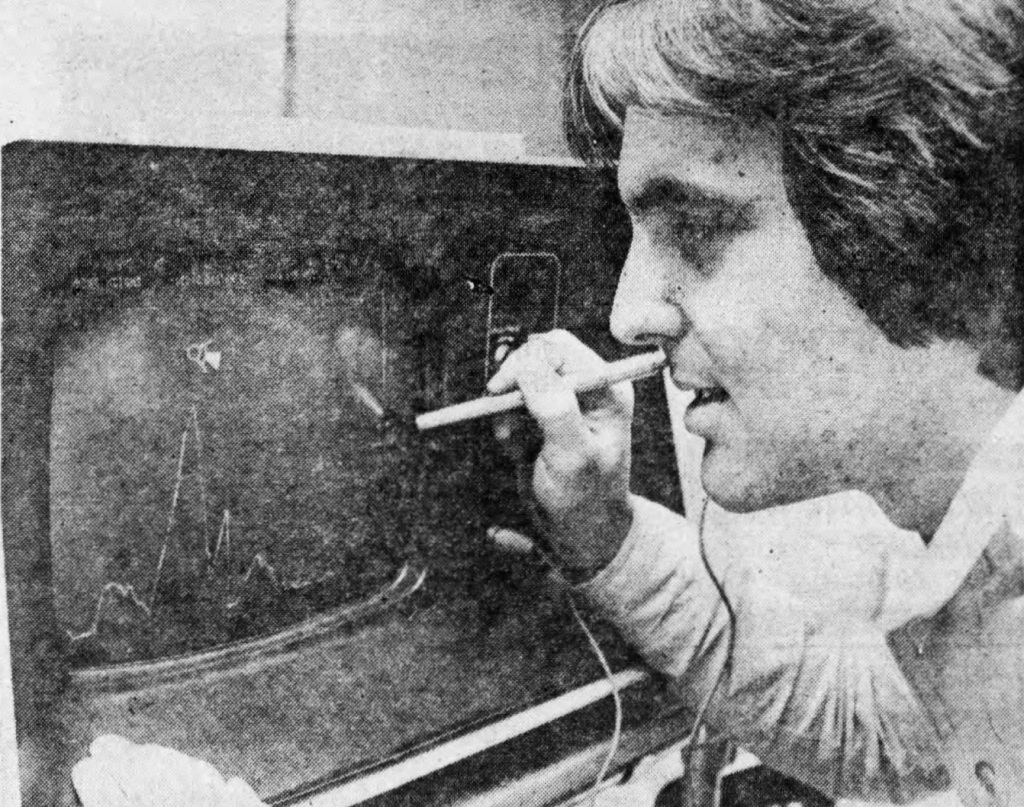

Alongside the CRT terminals replacing teletypes came the development of graphical terminals for applications like Computer Aided Design (CAD). These machines also required a connection to a mainframe to work, though their functionality was far more specific to each individual monitor in the brand-new field of computer graphics. These early experiments in the mid-1960s proved that computers could display virtually anything. Experiments at Bell Labs showed that, with enough memory, an arbitrary raster image could be output to a CRT. The work of Ivan Sutherland showed that drawing shapes in vector was merely a matter of math, even if it stretched the limits of what many mainframes could do.

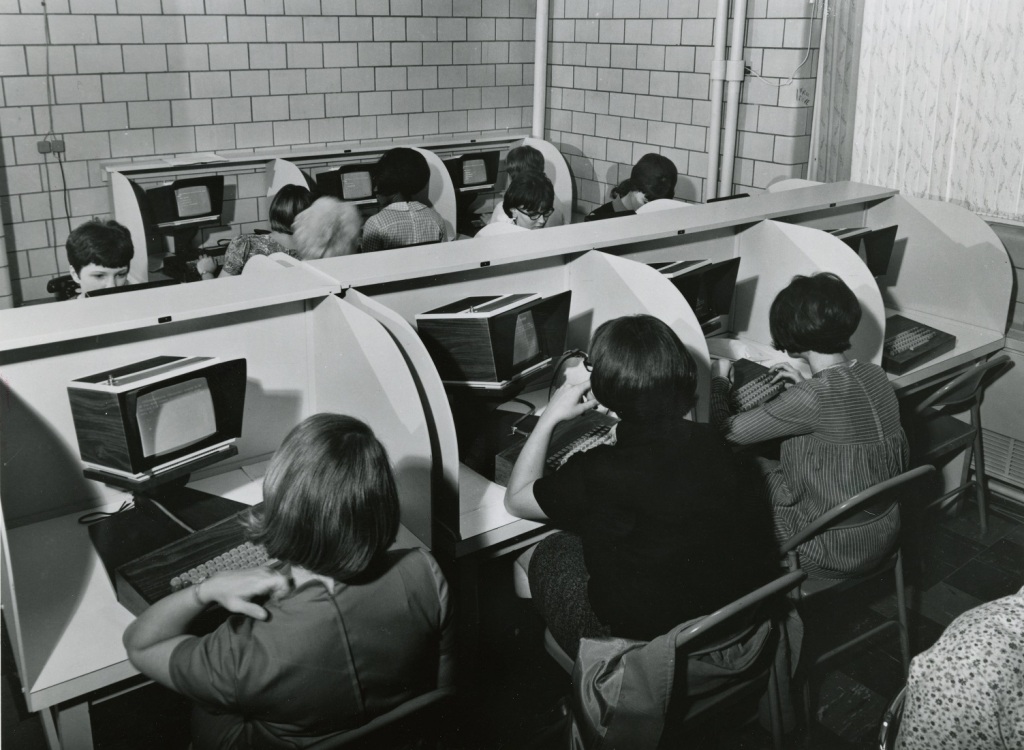

There was one substantial limitation to both terminal types: most computers could not handle large numbers of peripheral devices on their own. Even PLATO, which had broad ambitions of linking terminals, was limited to twenty stations in its PLATO III incarnation. From this bottleneck, some designers started to conceive of a terminal with its own “brain.” This would spare the host computer from constantly servicing each terminal, as well as untether the terminal from often unstable connections.

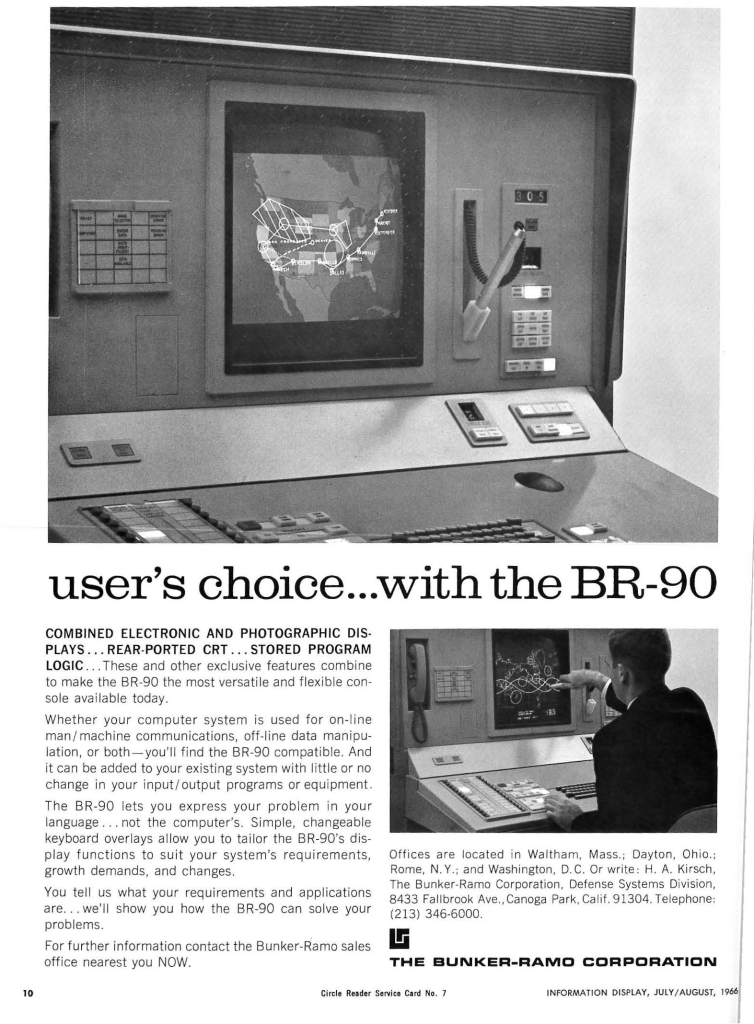

The first company to reach this conclusion came out of Project SAGE, the successor to the Whirlwind computer and the source of many other computer innovations (like the modem). In 1965, east coast company Bunker-Ramo was tasked with designing an updated version of the command stations for the SAGE system. Out of this, they created a terminal dubbed the AN/FYQ-37, which integrated stored programming into its terminal functions. The exact reasons aren’t known, though it likely had to do with the concept of distributed systems, which eventually powered projects like the ARPANET. Bunker-Ramo offered this design as the Model 90 and started the intelligent terminal business.

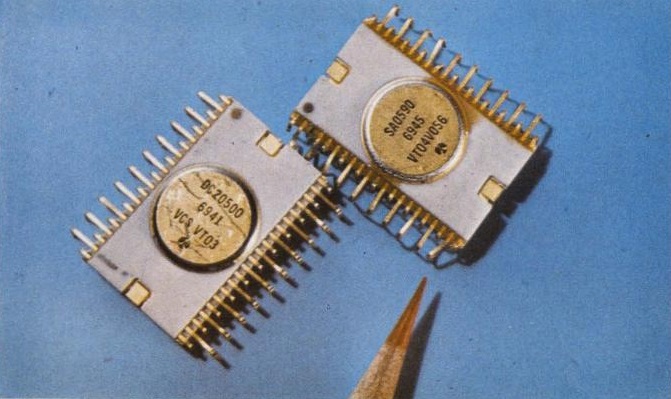

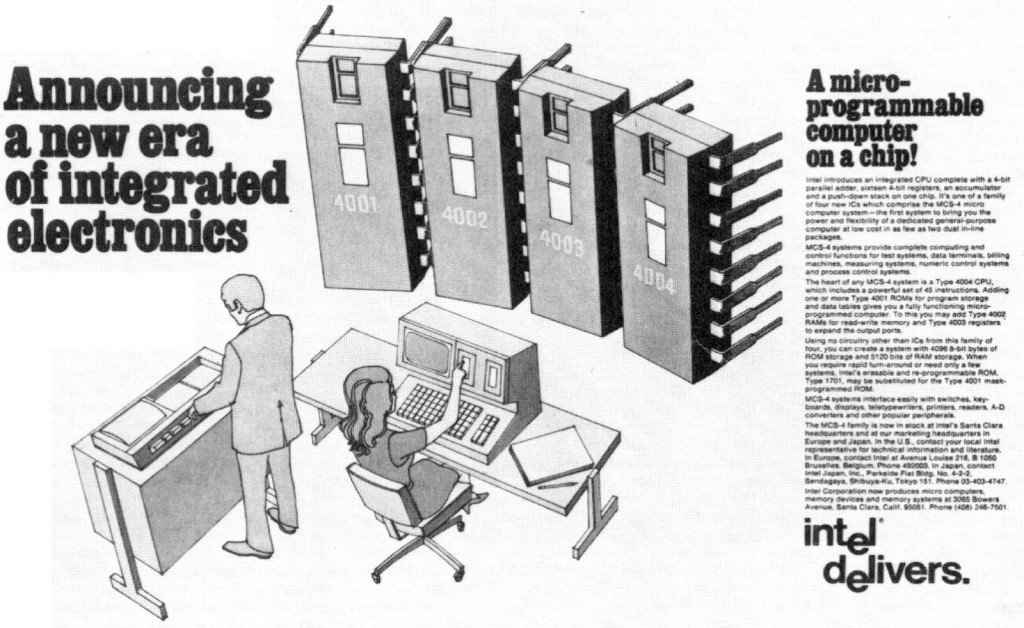

The BR-90 remained an isolated case, almost entirely due to its sheer size and expense. Another factor proved crucial to the development of standalone terminal systems: the miniaturization of computer components. Integrated circuits rapidly cut the cost and physical footprint of computers and soon made for a startling possibility, which the electronics trades began to refer to as the “computer on a chip.” With Moore’s Law formalized not long before, the expectation that a CPU would be condensed down to a single chip drove many assumptions about computer design in this period. And if the chips were going to be so small, why couldn’t an entire computer fit into the form factor of its I/O device?

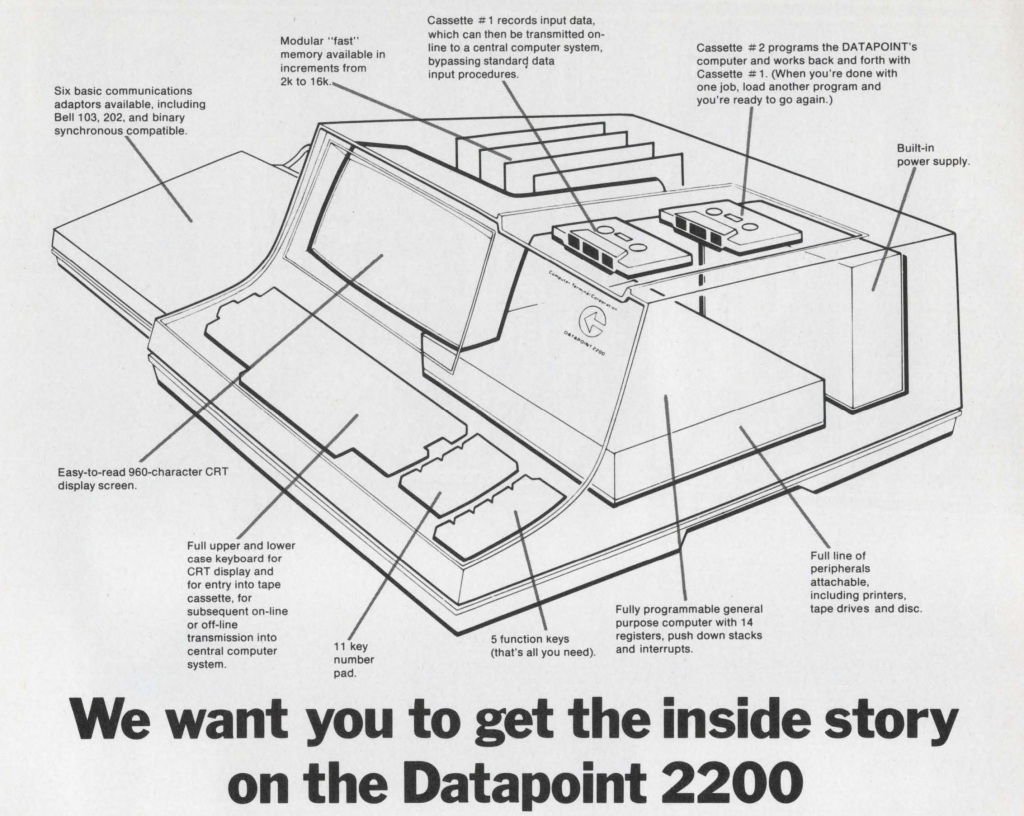

Leading this new way of thinking was Fairchild spin-off Four Phase Systems. Not only did they promise to use cutting-edge chip fabrication to create a computer in just a few integrated circuits, they promised to put it into a programmable terminal. Their Model IV/70 was essentially a proof of concept for a computer on a chip, which could be disconnected from the mainframe while still offering an integrated computing experience to an individual user. Though Four Phase publicized this vision, they were actually late in bringing the smart terminal experience to the mass market. They were beaten in 1970 by at least two products, the Datapoint 2200 and the Incoterm SPD 10/20.

Both terminals were created by experienced designers of visual displays, and both could run stored programs off the grid. In retrospect, people at both companies have spoken of customers who used the devices without ever connecting to a central computer. This was personal computing in a way only a few other devices had offered before. These computers created a new, versatile category of visual display which solved many of the issues of “dumb terminals” and forged a potential future in which ordinary people could access computing power outside of a business setting.

However, there was one massive flaw in smart terminal proliferation: production of these machines was slow. The standard business model for computer peripherals was to take large orders from companies and produce each order at scale, usually with a ninety-day window before delivery. With intelligent terminals, individual clients were seeking single units. According to Incoterm designer Neil Frieband, it simply wasn’t worth sending them out to single users, despite the demand. Plus, prices still ranged near $10,000 for an individual terminal despite the cost reductions brought by miniaturization.

All of these factors help to explain why Intel’s microprocessor was such a natural conclusion. Though the Intel 4004 came out of the calculator market, the 8008 was actually based on the architecture developed for the Datapoint 2200. Though Datapoint ultimately built the 2200 without Intel’s chip, the concept of a single-chip processor was baked into the terminal field. The very term “microprocessor” was first broadly used in the context of terminal devices and seemed like a natural fit. There was a significant problem with power, though: early microprocessors were not capable of taking on the mathematical load of most graphical terminals. Many people are more comfortable calling them microcontrollers for this reason.

So the terminal field continued to develop using high-powered large-scale integration chips. The Imlac PDS-1D, Beehive Medical’s Super Bee, and DEC’s GT-40 (host of the game Moonlander), along with other models, all existed before the kit market for microprocessors began. The early kits were functionally rather useless and didn’t even have any way to hook up to a useful output. Smart terminals were a better way of accessing computing power, but they were inaccessible. Even once microprocessors were powerful enough to be used in terminals, that didn’t bring the price down all that much. The bespoke components of a glass terminal simply made no sense for a standard consumer to buy, let alone the phone line costs to hook it into a central computer.

A more accessible option was creating a terminal out of a TV. Don Lancaster’s TV Typewriter, seen by many as the inflection point of the California hobbyist computer scene, was made possible by the falling cost of character display generators (more than likely a result of the calculator market). While a TV didn’t have the ability to draw crisp vector lines, nor did the display chips offer much promise in terms of visuals, offloading the display onto an ordinary, already mass-produced CRT television was the revelation needed to spark the revolution. The same was true of video games in the same period. If either had had to wait for specialized technology to become cheaply available, it would not have happened when it did.

That is the crux of this examination. The hobbyist style of personal computing was not an inevitable paradigm; in fact, there was more working against it than for it in the early days. Second-generation microcomputers (Sphere 1, Sol-20) still cost around a thousand dollars to be functional, and they too relied on the display chips developed for terminals. The likes of the Apple II had to jump through hoops with FCC regulations to harness the game-changing power of a CRT television. Eventually a new paradigm settled in: those seeking to engage with software on the open market went with microcomputers, while those who wanted to engage with data on a timeshared system used terminals.

The dominance of the new Personal Computer did not spell the end of the terminal market. Both smart and dumb terminals continued to be used in the timesharing context, with iconic designs like the DEC VT100 and the Lear Siegler ADM-3A becoming the new staples of computer labs as those labs finally discarded their teletypes. Early on, there were also attempts to merge the two models by using the microcomputer as a terminal. Services like The Source, CompuServe, and the commercial implementation of PLATO all attempted to create the consumer online services which mainframe companies saw as their way to the mass market.

It is worth remembering early visual displays in the history of widespread computing. So much of what made the early microcomputers possible came out of the work done in the terminal market. Early use cases of smart terminals presaged what people would do with the consolidated power of personal computers. Much of the early online infrastructure was developed with the concepts of the terminal in mind. In this new context, we can understand where the Silicon Valley computer model came from. It wasn’t a revolution invented out of whole cloth; it was a change in the objectives of computing for the masses.

Thanks to Neil Frieband for his insight.